From Sound to Word

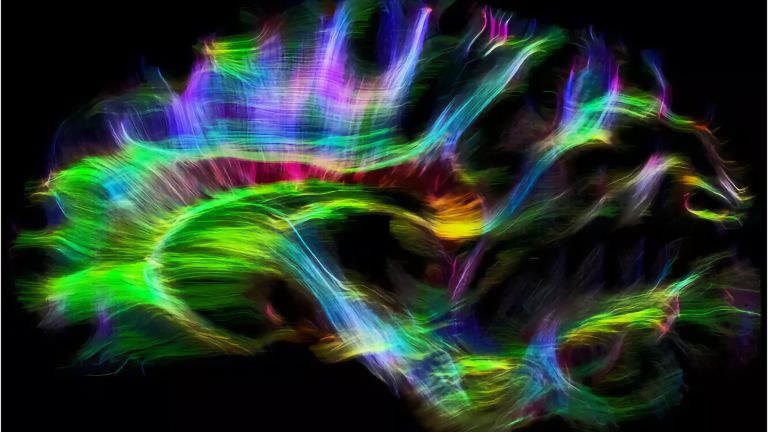

The ability to produce and understand language makes humans unique in evolution. Brain research has shown that areas in and below the cerebral cortex are involved in language processing.

Scientific advisor: Prof. Dr. Katharina von Kriegstein

Published: October 13, 2023

Level: intermediate

- Individual groups of neurons respond sensitively to the phonemes of human speech.

- In the cerebral cortex, parts of the frontal and temporal lobes are important for language comprehension and speech production.

- The brain can distinguish speech from other sounds by, among other things, its characteristic frequencies.

The phone rings, and the voice on the other end offers only a curt “Hello,” yet it is no problem to recognize one's husband or even one's boss by voice alone. Some people, however, lack this ability to pick out the characteristics of a voice and match them to a familiar person. This disorder, known as phonagnosia, may be linked to damage in regions such as the right temporal lobe that appear to process voices selectively. Until recently, phonagnosia had been observed only as a consequence of stroke. In 2009, however, researchers at University College London found a possibly congenital form in a 60-year-old patient referred to as “KH.” No anatomical defects could be detected in the patient. “KH” can neither learn unfamiliar voices nor recognize the voices of people she knows.

There are only two sounds, both identical and incredibly simple – but when the expectant listener hears their offspring babble something like “Pa-Pa” for the first time, it triggers a fireworks display of emotions. The little one is hugged, cuddled, and proudly shown off. Touching, but also a reaction to a – pardon me! – rather random event. After all, the child has surely tried out similar syllables countless times before, such as “ba,” “ma,” or “da”.

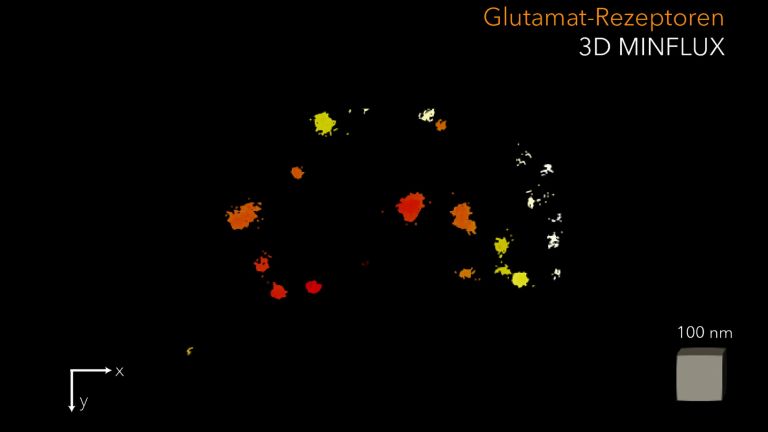

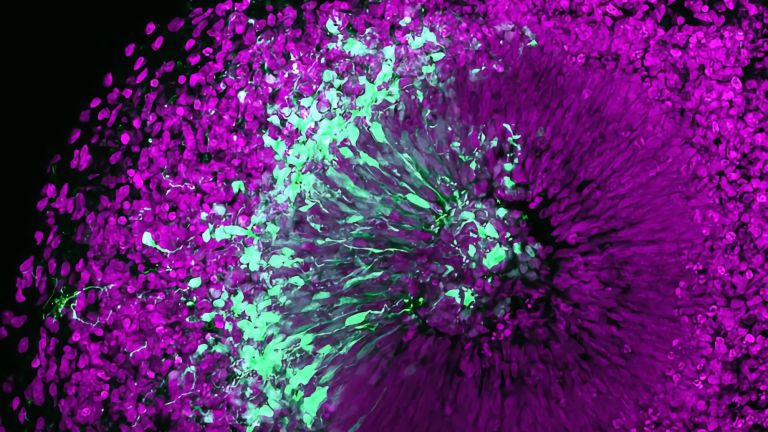

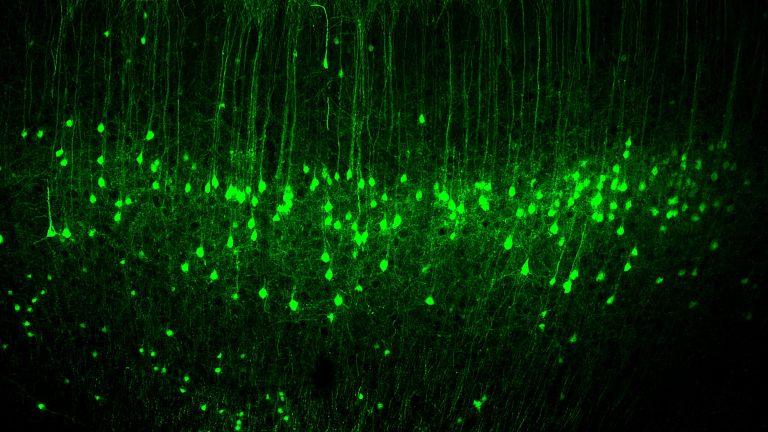

Similar syllables such as “ba” and “pa” differ only in what linguists call the most elementary linguistic units, the “distinctive features.” Even in the brains of monkeys, researchers have identified nerve cells that respond sensitively to such phoneme-distinguishing features of an acoustic stimulus: they respond to a sound like “ba” but not to “pa.” In humans, it is probably individual groups of neurons that recognize the approximately 100 phonemes of the linguistic alphabet from which all known languages can be composed.

But human language is more than just recognizing successive phonemes. It is no coincidence that the father melts only when the baby says “Pa” twice, because only then does the sound become recognizable as meaningful. Even if the offspring did not mean this bearded guy with his babbling, the proud father's brain reads a deep meaning into these two syllables. In the same way, the moody, reproachful “Dad” of the later teenager will take on a completely different meaning. And once the child starts speaking in coherent sentences, Dad is recognized grammatically as sometimes the subject and sometimes the object.

Central language areas: syntax and semantics

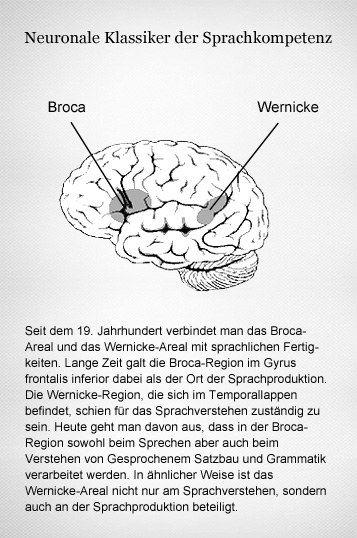

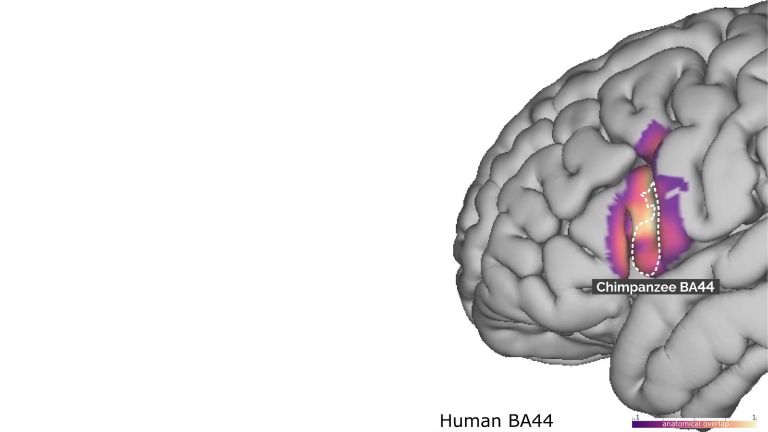

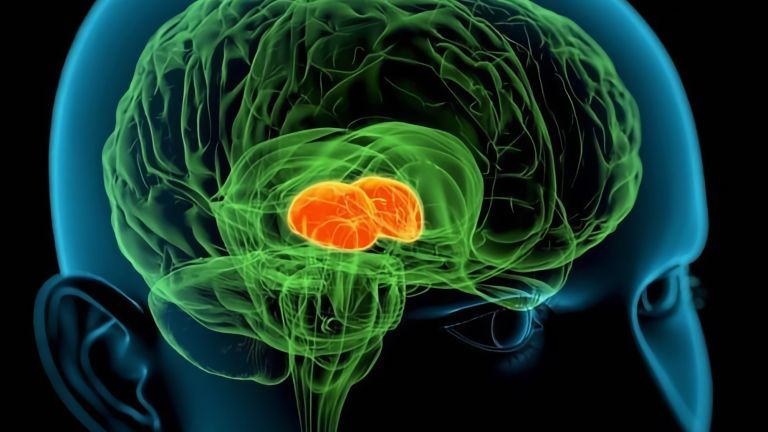

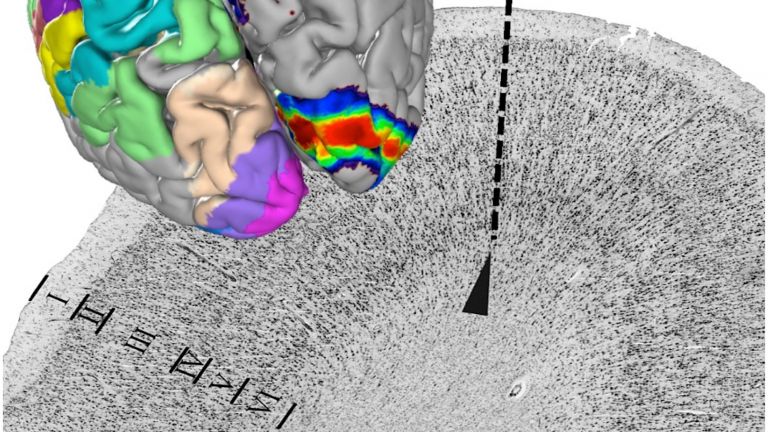

Until the 1970s, Broca's area in the inferior frontal gyrus and Wernicke's area, which usually lies in the left temporal lobe in right-handed people but is more often found on the right in left-handed people, were considered primarily responsible for all these linguistic cognitive functions. This view was based mainly on studies of patients with brain injuries such as strokes. According to these studies, Broca's area seemed to be primarily responsible for speech production and Wernicke's area for speech comprehension. However, more recent research shows that this clear distinction does not exist.

On closer examination, patients with Broca's-related speech disorders (expressive aphasia) usually also have difficulty understanding language, for example differences in sentence structure and syntax. It is therefore now assumed that sentence structure and grammar are processed in Broca's area, both for speaking and for understanding spoken language. The situation is similar for patients with damage to Wernicke's area (receptive aphasia), which, according to current understanding, may be a connection point between linguistic and semantic information. These patients do not always understand words correctly when listening. They also mix up words when speaking, saying “pear” or “biting fruit” instead of “apple,” for example.

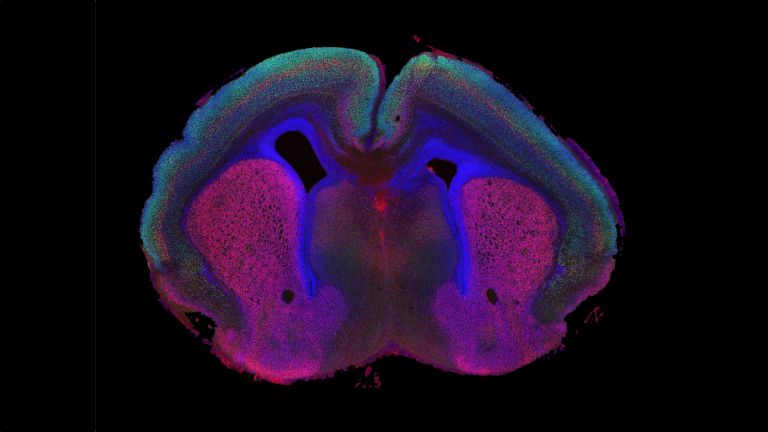

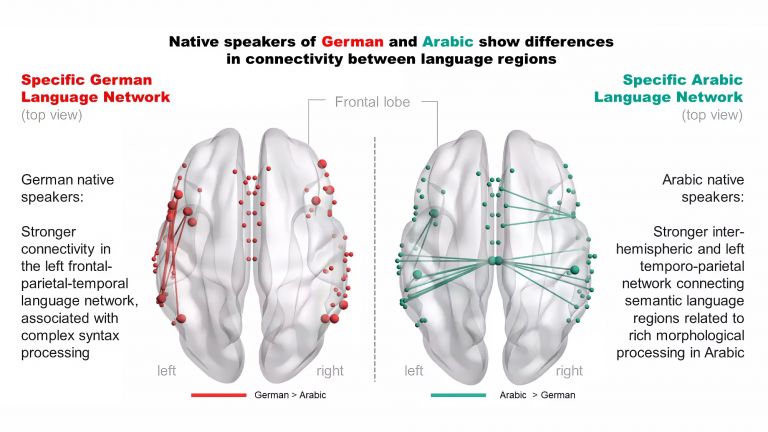

Not just unilateral activity

As a rule, most language processing takes place in the left hemisphere of the brain, but bilateral activity can also be observed for certain tasks. Studies of patients with injuries to the corpus callosum, which connects the two hemispheres of the brain, show that the right hemisphere is apparently necessary for processing acoustic features that span several sounds, such as accent or intonation. A 2008 study by researchers at Berlin's Charité hospital indicates that cooperation between the cerebral cortex and the thalamus is necessary for recognizing sentence structures and content. In general, it is becoming increasingly clear that language processing involves not only Broca's and Wernicke's areas, but also other areas of the cerebral cortex and structures below it, possibly including the basal ganglia.

From form to content

The path that language takes through the brain before the listener becomes consciously aware of it is surprising. According to linguist Angela Friederici from the Max Planck Institute for Human Cognitive and Brain Sciences, the first thing that is examined is not the meaning, but the grammar of a sentence. Form before content: within 200 milliseconds, the adult brain analyzes nouns, verbs, prepositions, and other grammatical subtleties. In children, this takes up to 350 milliseconds, an indication that grammar rules must be learned, but then become automatic. Only in a second phase, up to 400 milliseconds later, does the brain interpret the meaning of the words. In the third phase, after 600 milliseconds, the brain has finally reconciled the grammar and semantics of a sentence.

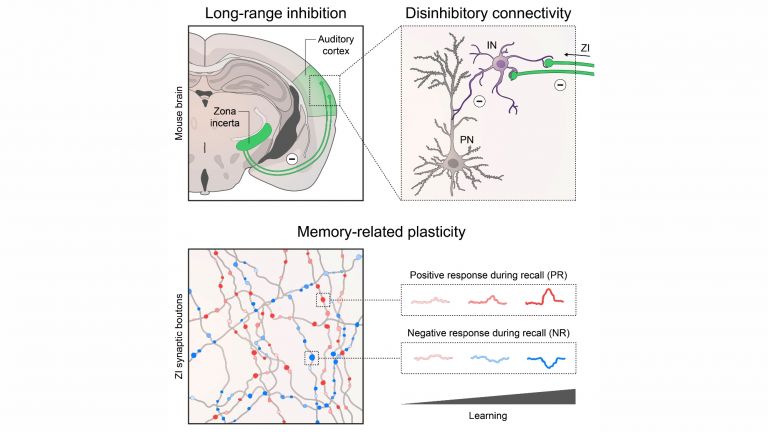

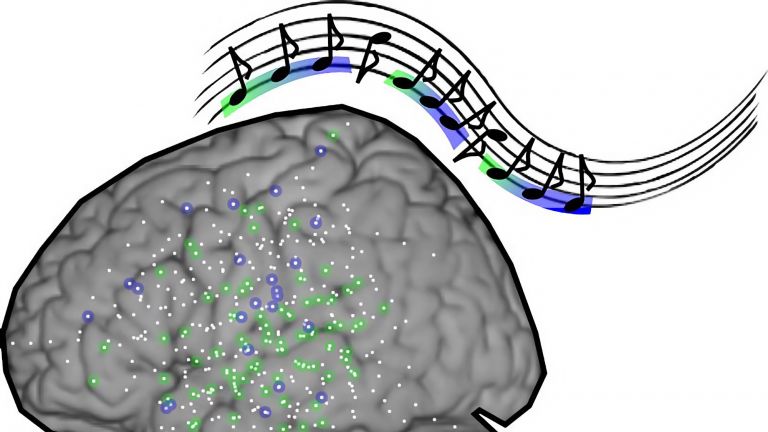

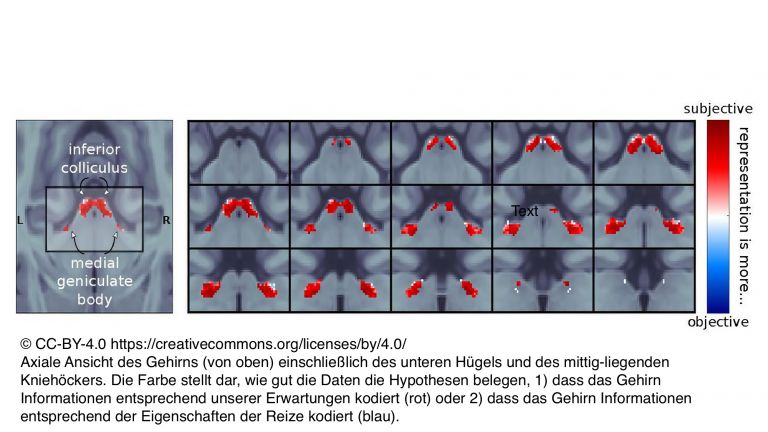

Distinguishing mere sounds from language

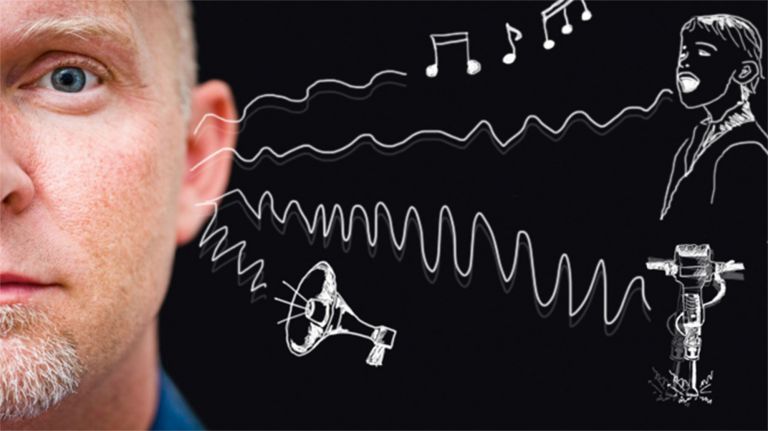

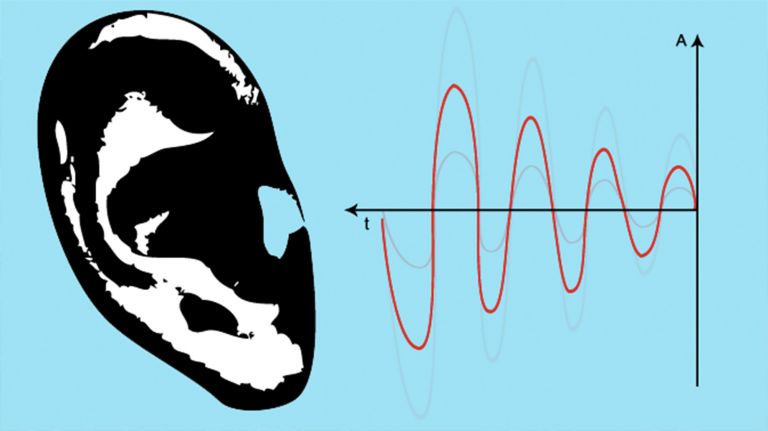

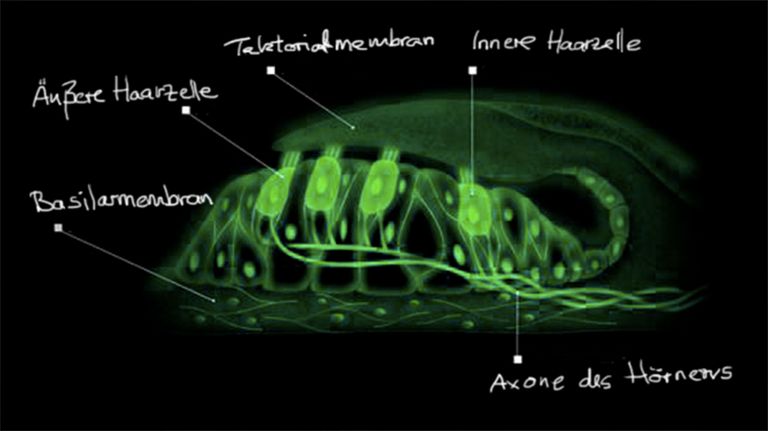

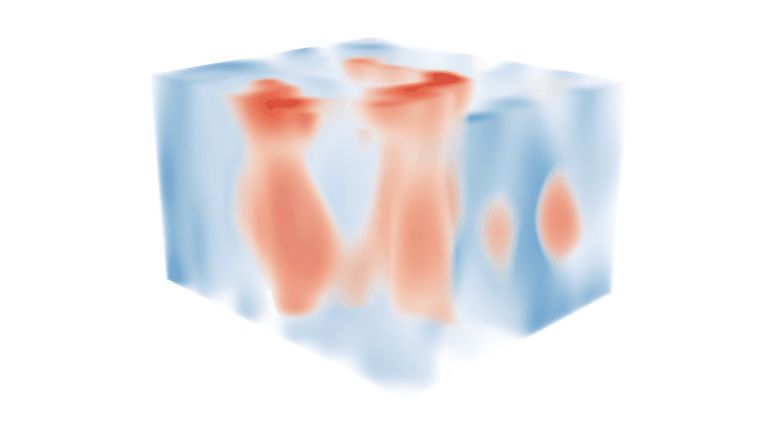

But how do parents recognize language, and not just sounds, in their child's babbling lip acrobatics? Apart from the parents' heightened expectations, what makes the difference between sound waves from the human vocal apparatus and those from musical instruments or cars? Like the retina of the eye, the cochlea in the inner ear apparently has a counterpart in the brain. High frequencies are processed by different groups of neurons (“fields”) in the auditory cortex than lower frequencies.

And just as a jackhammer covers a characteristic frequency spectrum, human speech also occurs within a certain range: 80 Hz to 12 kHz, with the fundamental tone for men around 125 Hz, for women around twice that (250 Hz), and for children about three and a half times as high (440 Hz). For this reason alone, “Pa-Pa” is processed in part by different neurons than other sounds, be it music, noise, or just babbling.
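The frequency figures above can be checked with a little arithmetic. The following is a minimal Python sketch, not a model of auditory processing: it only compares the quoted fundamental frequencies with the male value and tests whether a frequency lies inside the quoted speech range. The names `FUNDAMENTAL_HZ`, `SPEECH_RANGE_HZ`, `ratio_to_men`, and `in_speech_range` are illustrative assumptions and do not come from the article.

```python
# Fundamental frequencies (Hz) as quoted in the text.
# All names here are hypothetical, chosen only for illustration.
FUNDAMENTAL_HZ = {"men": 125.0, "women": 250.0, "children": 440.0}

# Range of human speech quoted in the text: 80 Hz to 12 kHz.
SPEECH_RANGE_HZ = (80.0, 12_000.0)


def ratio_to_men(group: str) -> float:
    """Return a group's fundamental frequency relative to the male value."""
    return FUNDAMENTAL_HZ[group] / FUNDAMENTAL_HZ["men"]


def in_speech_range(freq_hz: float) -> bool:
    """Return True if the frequency lies inside the quoted speech range."""
    low, high = SPEECH_RANGE_HZ
    return low <= freq_hz <= high


if __name__ == "__main__":
    for group, f0 in FUNDAMENTAL_HZ.items():
        print(
            f"{group}: {f0:.0f} Hz, "
            f"{ratio_to_men(group):.2f} times the male value, "
            f"within speech range: {in_speech_range(f0)}"
        )
```

With the quoted values, the female fundamental is exactly twice the male one, and the children's value is 440 / 125 = 3.52 times the male one.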

First published on July 27, 2012

Last updated on October 13, 2023